Ex-mining P104-100 modded to 8 GB: a second career in machine learning

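Why I bought it

I recently started tinkering with TensorFlow and found that training on the CPU took too long. The only graphics card I had on hand was an AMD card, which is a hassle to set up for this, so I decided to buy an NVIDIA card. I looked at compute cards such as the K40 and K80 and checked the GPU Compute Capability table on the NVIDIA website. In the end my bargain-hunter instincts won, and I picked up a used mining card, a P104-100, on Xianyu (billed as the mining edition of the 1070, Compute Capability 6.1). The mining card ships with 4 GB of VRAM, which can be unlocked to 8 GB by flashing a modified BIOS, and 690 yuan seemed an acceptable price. I ordered a Gigabyte triple-fan model, but what arrived was an MSI low-hashrate card, so the seller got one over on me. Returning it was too much trouble, so I kept it, through tears.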

A look at the card

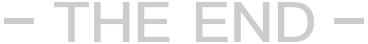

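P104 specifications:

GPU-Z confirms that the VRAM is indeed 8 GB after flashing the BIOS. Reportedly this P104 can also be set up for gaming the same way as the P106. I only use it for machine learning, so I did not try. These mining cards are all PCIe 1X, though, so bandwidth may be a problem for games. I am attaching the card's BIOS for any readers who need it: 8wx6. Flash a BIOS with caution.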

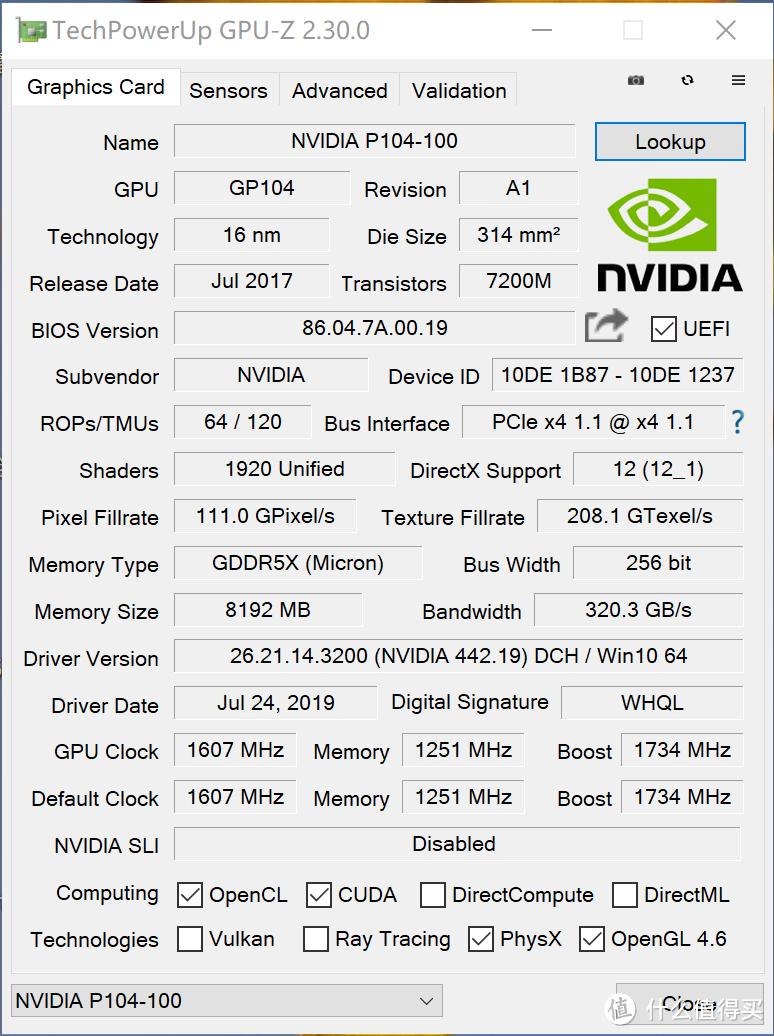

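Installing the tensorflow-gpu environment

I installed tensorflow-gpu with Anaconda. Here are the steps in brief.

Download and install Anaconda. During setup, tick "Add Anaconda to my PATH environment variable".

Open cmd and enter the following command, answering y at every y/n prompt:

conda create -n tensorflow pip python=3.7

Then enter:

activate tensorflow

Install with pip from a mirror inside China by entering:

pip install --default-timeout=100 --ignore-installed --upgrade tensorflow-gpu==2.0.1 -i https://pypi.tuna.tsinghua.edu.cn/simple

Download and install CUDA 10.0 and cuDNN. Unzip cuDNN, then copy the files in each of the three extracted folders into the folder of the same name under the CUDA install directory. If you install CUDA 10.1 instead, some files need to be renamed.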
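The cuDNN copy step can also be scripted. The following is a minimal sketch, not the method used in this post: the helper name `merge_into` and the download folder are hypothetical, and the CUDA path assumes the default install location. It copies every file from the unpacked cuDNN tree into the matching subfolder of the CUDA directory.

```python
import os
import shutil


def merge_into(src_root, dst_root):
    """Copy every file under src_root into the same relative place under dst_root."""
    copied = []
    for folder, _subdirs, files in os.walk(src_root):
        rel = os.path.relpath(folder, src_root)
        target = os.path.normpath(os.path.join(dst_root, rel))
        os.makedirs(target, exist_ok=True)
        for name in files:
            shutil.copy2(os.path.join(folder, name), os.path.join(target, name))
            copied.append(os.path.normpath(os.path.join(rel, name)))
    return sorted(copied)


if __name__ == "__main__":
    # Hypothetical locations: adjust both to where cuDNN was unpacked and
    # where CUDA was installed. Writing under Program Files usually needs
    # an elevated (administrator) prompt.
    cudnn_dir = r"C:\Downloads\cudnn\cuda"
    cuda_dir = r"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.0"
    if os.path.isdir(cudnn_dir):
        for path in merge_into(cudnn_dir, cuda_dir):
            print("copied", path)
```

Copying by hand in Explorer does the same thing; the script is only useful if you rebuild the environment often.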

Then, under "My Computer > Manage > Advanced settings > Environment Variables", find Path and add the following entries (assuming CUDA's default install path):

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.0\bin

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.0\libnvvp

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.0\lib

C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.0\include
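A missing or mistyped PATH entry is easy to overlook in the Windows dialog. As a rough sketch, the four entries can be checked from Python. The function name `missing_cuda_dirs` is made up for this example, and the root path assumes the default CUDA 10.0 install location.

```python
import os

# Assumed default install location for CUDA 10.0 on Windows.
CUDA_ROOT = r"C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v10.0"
REQUIRED_SUBDIRS = ["bin", "libnvvp", "lib", "include"]


def missing_cuda_dirs(path_value, cuda_root=CUDA_ROOT, sep=";"):
    """Return the required CUDA directories that are absent from a PATH string."""
    # Windows paths are case-insensitive, so compare lowercased entries
    # with any trailing backslash removed.
    entries = {p.strip().rstrip("\\").lower() for p in path_value.split(sep) if p.strip()}
    required = [cuda_root + "\\" + sub for sub in REQUIRED_SUBDIRS]
    return [d for d in required if d.lower() not in entries]


if __name__ == "__main__":
    for d in missing_cuda_dirs(os.environ.get("PATH", "")):
        print("Not on PATH:", d)
```

Run it in a freshly opened cmd window, since windows opened before the change keep the old PATH.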

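Verifying the installation

Open cmd and enter the following command:

activate tensorflow

Then enter:

python

Then enter:

import tensorflow

If no exception is raised, the installation succeeded.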
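A clean import shows that the package loads, but it may not prove that TensorFlow can actually see the GPU. A slightly stricter check, sketched here with a hypothetical helper `visible_gpus`, asks TensorFlow for its list of physical GPU devices. It assumes the `tf.config.experimental.list_physical_devices` call that ships with TensorFlow 2.0 and 2.1.

```python
def visible_gpus():
    """Return the names of GPUs TensorFlow can see, or None if TensorFlow is missing."""
    try:
        import tensorflow as tf
    except ImportError:
        return None
    # Available in TensorFlow 2.0 and 2.1 under the experimental namespace.
    return [d.name for d in tf.config.experimental.list_physical_devices("GPU")]


if __name__ == "__main__":
    gpus = visible_gpus()
    if gpus is None:
        print("TensorFlow is not importable in this environment")
    elif not gpus:
        print("TensorFlow imported, but no GPU is visible (check CUDA, cuDNN and PATH)")
    else:
        print("Visible GPUs:", gpus)
```

An empty list usually points back at the CUDA or cuDNN steps rather than at the card itself.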

Performance tests

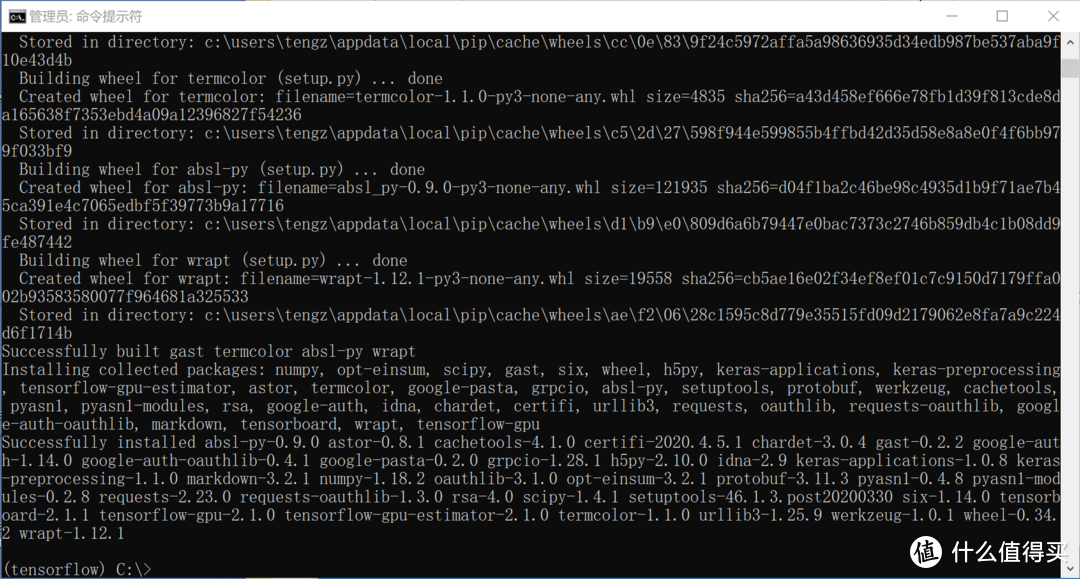

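My machine has no integrated graphics, so besides the P104 I always need a second card plugged in for display. That is why I also bought a 2070 SUPER, which the NVIDIA website lists at compute capability 7.5. Since I had bought it anyway, I pitted it against the compute capability 6.1 mining card!

Now for the comparison.

Both cards ran in the same environment: Windows 10, CUDA 10.0, tensorflow-gpu 2.1, Anaconda3-2020.02-Windows, Python 3.7.7.

1. First, the "Hello World" from the TensorFlow website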
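The post does not say how each run was pinned to one card. One common way, shown here only as an assumption, is to set the `CUDA_VISIBLE_DEVICES` environment variable before TensorFlow is imported. The helper `select_gpu` is hypothetical, and which index maps to which card depends on the machine.

```python
import os


def select_gpu(index):
    """Expose only one CUDA device to libraries imported afterwards."""
    # CUDA_VISIBLE_DEVICES is read when the CUDA runtime initialises,
    # so this must run before `import tensorflow`.
    os.environ["CUDA_VISIBLE_DEVICES"] = str(index)
    return os.environ["CUDA_VISIBLE_DEVICES"]


if __name__ == "__main__":
    # Hypothetical numbering: check the device line TensorFlow prints at
    # startup to see which card actually ended up as GPU:0.
    select_gpu(0)
    # import tensorflow as tf  # import only after the variable is set
```

The "Created TensorFlow device" line in each log names the physical GPU, which is a quick way to confirm the right card was used.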

2070 SUPER

I tensorflow/core/common_runtime/gpu/gpu_device.cc:1304] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 6283 MB memory) -> physical GPU (device: 0, name: GeForce RTX 2070 SUPER, pci bus id: 0000:65:00.0, compute capability: 7.5)

Train on 60000 samples

Epoch 1/5

60000/60000 [==============================] - 7s 117us/sample - loss: 0.2996 - accuracy: 0.9123

Epoch 2/5

60000/60000 [==============================] - 6s 99us/sample - loss: 0.1448 - accuracy: 0.9569

Epoch 3/5

60000/60000 [==============================] - 5s 85us/sample - loss: 0.1068 - accuracy: 0.9682

Epoch 4/5

60000/60000 [==============================] - 6s 101us/sample - loss: 0.0867 - accuracy: 0.9727

Epoch 5/5

60000/60000 [==============================] - 6s 96us/sample - loss: 0.0731 - accuracy: 0.9766

P104-100

I tensorflow/core/common_runtime/gpu/gpu_device.cc:1304] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 7482 MB memory) -> physical GPU (device: 0, name: P104-100, pci bus id: 0000:07:00.0, compute capability: 6.1)

Train on 60000 samples

Epoch 1/5

60000/60000 [==============================] - 4s 68us/sample - loss: 0.2957 - accuracy: 0.9143

Epoch 2/5

60000/60000 [==============================] - 3s 56us/sample - loss: 0.1445 - accuracy: 0.9569

Epoch 3/5

60000/60000 [==============================] - 3s 58us/sample - loss: 0.1087 - accuracy: 0.9668

Epoch 4/5

60000/60000 [==============================] - 3s 57us/sample - loss: 0.0898 - accuracy: 0.9720

Epoch 5/5

60000/60000 [==============================] - 3s 58us/sample - loss: 0.0751 - accuracy: 0.9764

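TensorFlow created the device with 7482 MB of memory on the P104 and 6283 MB on the 2070 SUPER, although both are 8 GB cards. This is probably because the 2070 SUPER also drives the display and has to keep some VRAM in reserve for that.

As soon as the first comparison finished, I wanted to cry. The P104 took about 3 s per epoch (around 58 us/sample), while the 2070 SUPER took 5 to 7 s (85 to 117 us/sample). That makes the P104 2 to 3 s faster per epoch. My 2070 SUPER was a waste of money. I am going to return it.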
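To put a single number on the gap, the per-sample times in the two logs can be averaged. This is a small sketch with a made-up helper, `mean_us_per_sample`. The figures are copied from the runs above, with the loss and accuracy columns trimmed.

```python
import re
from statistics import mean

# Matches the per-sample time in a Keras progress line, e.g. "- 7s 117us/sample".
_PER_SAMPLE = re.compile(r"(\d+)us/sample")


def mean_us_per_sample(log_text):
    """Average the 'us/sample' figures found in Keras progress output."""
    values = [int(m) for m in _PER_SAMPLE.findall(log_text)]
    if not values:
        raise ValueError("no 'us/sample' figures found")
    return mean(values)


# Per-epoch lines copied from the two runs (loss/accuracy trimmed).
rtx_2070_super = """
60000/60000 - 7s 117us/sample
60000/60000 - 6s 99us/sample
60000/60000 - 5s 85us/sample
60000/60000 - 6s 101us/sample
60000/60000 - 6s 96us/sample
"""
p104_100 = """
60000/60000 - 4s 68us/sample
60000/60000 - 3s 56us/sample
60000/60000 - 3s 58us/sample
60000/60000 - 3s 57us/sample
60000/60000 - 3s 58us/sample
"""

slow = mean_us_per_sample(rtx_2070_super)
fast = mean_us_per_sample(p104_100)
print(f"2070 SUPER: {slow:.1f} us/sample, P104-100: {fast:.1f} us/sample")
print(f"P104-100 is about {slow / fast:.2f}x faster on this test")
```

Five epochs of a tiny model is a small sample, so the ratio is only a rough indication.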

2. Next, the 1D CNN for text classification from the official Keras documentation

The documentation lists these reference timings for this test:

90s/epoch on Intel i5 2.4Ghz CPU.

10s/epoch on Tesla K40 GPU.

2070 SUPER

Epoch 1/5

25000/25000 [==============================] - 10s 418us/step - loss: 0.4080 - accuracy: 0.7949 - val_loss: 0.3058 - val_accuracy: 0.8718

Epoch 2/5

25000/25000 [==============================] - 8s 338us/step - loss: 0.2318 - accuracy: 0.9061 - val_loss: 0.2809 - val_accuracy: 0.8816

Epoch 3/5

25000/25000 [==============================] - 9s 349us/step - loss: 0.1663 - accuracy: 0.9359 - val_loss: 0.2596 - val_accuracy: 0.8936

Epoch 4/5

25000/25000 [==============================] - 9s 341us/step - loss: 0.1094 - accuracy: 0.9607 - val_loss: 0.3009 - val_accuracy: 0.8897

Epoch 5/5

25000/25000 [==============================] - 9s 341us/step - loss: 0.0752 - accuracy: 0.9736 - val_loss: 0.3365 - val_accuracy: 0.8871

P104-100

Epoch 1/5

25000/25000 [==============================] - 8s 338us/step - loss: 0.4059 - accuracy: 0.7972 - val_loss: 0.2898 - val_accuracy: 0.8772

Epoch 2/5

25000/25000 [==============================] - 7s 285us/step - loss: 0.2372 - accuracy: 0.9038 - val_loss: 0.2625 - val_accuracy: 0.8896

Epoch 3/5

25000/25000 [==============================] - 7s 286us/step - loss: 0.1665 - accuracy: 0.9357 - val_loss: 0.3274 - val_accuracy: 0.8701

Epoch 4/5

25000/25000 [==============================] - 7s 286us/step - loss: 0.1142 - accuracy: 0.9591 - val_loss: 0.3090 - val_accuracy: 0.8854

Epoch 5/5

25000/25000 [==============================] - 7s 286us/step - loss: 0.0728 - accuracy: 0.9747 - val_loss: 0.3560 - val_accuracy: 0.8843

The mining card P104 was again the fastest, and both cards beat the Tesla K40.
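As a quick worked comparison against the documentation's reference figures, the sketch below divides the reference epoch time by the measured one. The measured values are the whole-second epoch times Keras printed for most epochs of each run, so the results are approximate. The function name `speedup` is only illustrative.

```python
# Reference timings quoted from the Keras documentation (seconds per epoch).
REFERENCE = {"Intel i5 2.4GHz CPU": 90, "Tesla K40": 10}

# Typical epoch times as printed in the logs (rounded to whole seconds by Keras).
MEASURED = {"2070 SUPER": 9, "P104-100": 7}


def speedup(reference_s, measured_s):
    """How many times faster the measured run is than the reference."""
    return reference_s / measured_s


for card, secs in MEASURED.items():
    for ref, ref_secs in REFERENCE.items():
        print(f"{card}: {speedup(ref_secs, secs):.2f}x vs {ref}")
```

The reference numbers come from a different machine and software stack, so they are best read as a loose yardstick.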

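3. Finally, the test "Train an Auxiliary Classifier GAN (ACGAN) on the MNIST dataset"

The example's web page lists these per-epoch timings:

| Hardware | Backend | Time / Epoch |
| --- | --- | --- |
| CPU | TF | 3 hrs |
| Titan X (maxwell) | TF | 4 min |
| Titan X (maxwell) | TH | 7 min |

I ran 5 epochs, with the following results:

2070 SUPER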

Epoch 1/5

600/600 [==============================] - 45s 75ms/step

Testing for epoch 1:

component | loss| generation_loss | auxiliary_loss

-----------------------------------------------------------------

generator (train) | 0.76| 0.4153 | 0.3464

generator (test) | 1.16| 1.0505 | 0.1067

discriminator (train) | 0.68| 0.2566 | 0.4189

discriminator (test) | 0.74| 0.5961 | 0.1414

Epoch 2/5

600/600 [==============================] - 37s 62ms/step

Testing for epoch 2:

component | loss| generation_loss | auxiliary_loss

-----------------------------------------------------------------

generator (train) | 1.05| 0.9965 | 0.0501

generator (test) | 0.73| 0.7147 | 0.0117

discriminator (train) | 0.85| 0.6851 | 0.1644

discriminator (test) | 0.75| 0.6933 | 0.0553

Epoch 3/5

600/600 [==============================] - 38s 64ms/step

Testing for epoch 3:

component | loss| generation_loss | auxiliary_loss

-----------------------------------------------------------------

generator (train) | 0.84| 0.8246 | 0.0174

generator (test) | 0.67| 0.6645 | 0.0030

discriminator (train) | 0.82| 0.7042 | 0.1158

discriminator (test) | 0.77| 0.7279 | 0.0374

Epoch 4/5

600/600 [==============================] - 38s 63ms/step

Testing for epoch 4:

component | loss| generation_loss | auxiliary_loss

-----------------------------------------------------------------

generator (train) | 0.81| 0.7989 | 0.0107

generator (test) | 0.66| 0.6604 | 0.0026

discriminator (train) | 0.80| 0.7068 | 0.0938

discriminator (test) | 0.74| 0.7047 | 0.0303

Epoch 5/5

600/600 [==============================] - 38s 64ms/step

Testing for epoch 5:

component | loss| generation_loss | auxiliary_loss

-----------------------------------------------------------------

generator (train) | 0.80| 0.7890 | 0.0083

generator (test) | 0.64| 0.6388 | 0.0021

discriminator (train) | 0.79| 0.7049 | 0.0807

discriminator (test) | 0.73| 0.7056 | 0.0266

P104-100

Epoch 1/5

600/600 [==============================] - 63s 105ms/step

Testing for epoch 1:

component | loss| generation_loss | auxiliary_loss

-----------------------------------------------------------------

generator (train) | 0.79| 0.4320 | 0.3590

generator (test) | 0.88| 0.8000 | 0.0802

discriminator (train) | 0.68| 0.2604 | 0.4182

discriminator (test) | 0.72| 0.5822 | 0.1380

Epoch 2/5

600/600 [==============================] - 59s 98ms/step

Testing for epoch 2:

component | loss| generation_loss | auxiliary_loss

-----------------------------------------------------------------

generator (train) | 1.02| 0.9747 | 0.0450

generator (test) | 0.79| 0.7753 | 0.0165

discriminator (train) | 0.85| 0.6859 | 0.1629

discriminator (test) | 0.77| 0.7168 | 0.0576

Epoch 3/5

600/600 [==============================] - 59s 98ms/step

Testing for epoch 3:

component | loss| generation_loss | auxiliary_loss

-----------------------------------------------------------------

generator (train) | 0.84| 0.8263 | 0.0170

generator (test) | 0.64| 0.6360 | 0.0042

discriminator (train) | 0.82| 0.7062 | 0.1157

discriminator (test) | 0.77| 0.7353 | 0.0384

Epoch 4/5

600/600 [==============================] - 58s 97ms/step

Testing for epoch 4:

component | loss| generation_loss | auxiliary_loss

-----------------------------------------------------------------

generator (train) | 0.82| 0.8036 | 0.0115

generator (test) | 0.69| 0.6850 | 0.0019

discriminator (train) | 0.80| 0.7054 | 0.0933

discriminator (test) | 0.75| 0.7165 | 0.0301

Epoch 5/5

600/600 [==============================] - 58s 97ms/step

Testing for epoch 5:

component | loss| generation_loss | auxiliary_loss

-----------------------------------------------------------------

generator (train) | 0.80| 0.7904 | 0.0087

generator (test) | 0.64| 0.6400 | 0.0028

discriminator (train) | 0.79| 0.7046 | 0.0806

discriminator (test) | 0.74| 0.7152 | 0.0272

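This time the 2070 SUPER finally took less time than the P104-100. The new card gets to stay, for now.

Summary

For personal study, the mining card P104-100 modded to 8 GB offers good value for money and is worth buying.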
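Putting the three tests side by side makes the pattern easier to see. The sketch below sums the whole-second epoch times printed in each log and expresses the P104-100's total as a fraction of the 2070 SUPER's. The helper `relative_time` is made up for this example, and the rounding to whole seconds makes the MNIST figure in particular approximate.

```python
# Whole-second epoch times as printed in the logs, five epochs per run.
EPOCH_SECONDS = {
    "MNIST hello world": {
        "2070 SUPER": [7, 6, 5, 6, 6],
        "P104-100": [4, 3, 3, 3, 3],
    },
    "1D CNN text classification": {
        "2070 SUPER": [10, 8, 9, 9, 9],
        "P104-100": [8, 7, 7, 7, 7],
    },
    "ACGAN on MNIST": {
        "2070 SUPER": [45, 37, 38, 38, 38],
        "P104-100": [63, 59, 59, 58, 58],
    },
}


def relative_time(runs, card="P104-100", baseline="2070 SUPER"):
    """Total time of `card` divided by total time of `baseline` (below 1 means faster)."""
    return sum(runs[card]) / sum(runs[baseline])


for test_name, runs in EPOCH_SECONDS.items():
    ratio = relative_time(runs)
    print(f"{test_name}: P104-100 takes {ratio:.2f}x the time of the 2070 SUPER")
```

On these numbers the P104-100 leads on the two small models and falls behind only on the heavier ACGAN run.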

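Reader comment from 九万:

I ran object detection with the same model on the same images, and in single-image detection speed the 2060 was a good deal faster than the P104.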
